Reynald Oliveria

March 30, 2022

A World Full of Bugs

An insight into the buggy world of programming

We all enjoy watching Gordon Ramsay cook. It seems so effortless. He doesn’t have to measure out what he uses. His steaks all come off the pan a perfect medium rare. When he prepares meat, it comes off the bone cleanly.

But all of us who have tried to cook at home know that it’s never that perfect for us. We might overcook something and have to start over. We might make a sauce too spicy and have to water it down. At the very least, we taste before we serve, and we adjust based on what we taste. Even Gordon Ramsay tastes and adjusts every so often.

Mistakes, and the adjustments we make because of them, are just too real to be in movies or on TV. We don’t want to see what we see in everyday life. We want some idealized version, like Gordon’s flawless preparation of a delicate lobster.

It’s exactly the same for coding. What we see in movies and on TV is an idealized simplification. However, unlike cooking, coding is something that most of us never have to deal with. So, most of us only ever see coding in this simplified light.

We are familiar with coding scenes like the one in Mr. Robot where Elliot frantically types up code to hack FBI phones. In that four-minute scene, we see him write several different functions. Think of functions in code as the different components of a meal. In the same way that the sauce, the steak, the fries, the salad, the dressing, the soup, and the dessert come together to make an entire meal, functions come together to form a computer program.

What we never see is Elliot trying to run these functions to see if they work.

Testing whether pieces of code work, and then adjusting the code, is a process known as debugging. It is the equivalent of tasting and adjusting what you are cooking. Naturally, debugging ought to be done as you write code.

Just as the size of your cuts of meat affects how long they take to cook in a stew, the code you have already written affects how the code you write next performs. And just as making sure your cuts of meat are the right size before they go into the stew makes cooking easier, making sure your code works right after you write it makes the rest of the coding process easier.

In the clip, Elliot never does this. We never see him click a button and wait to see whether what he wrote works. We just see him frantically typing new code. But maybe debugging happens so rarely that Elliot simply did not write enough code to need it.

So how much do programmers actually debug?

In a project proposing a debugging product, from the Judge Business School at the University of Cambridge, programmers report spending, on average, nearly 50% of their time debugging. Another study, by researchers from George Mason University (GMU), found that in a controlled setting a developer can spend upwards of 95% of their time debugging. In other words, about half the time, programmers are not making something new. They are fixing something that is broken.

From personal experience, debugging is much more difficult and much more frustrating than coding up new features for a computer program. An essay published by the Association for Computing Machinery (ACM) suggests that this difficulty arises because we cannot always think precisely the way a computer processes things. To compensate, programmers build an approximate mental model of the computer, one that isn’t totally accurate. This is where bugs spawn.

In fact, the GMU study also found that even while developing new features, developers spend 40% of their time debugging. Some programming environments do little to help programmers avoid bugs. Take a look at this MATLAB code:

This little piece of code figures out how many ways there are to order some number of objects. For example, in how many different orders can I do my homework for three classes? If we put 3 in for the letter “l” in that code, it should tell us the answer, 3 × 2 × 1 = 6. But it turns out that this piece of code never gives an answer. The programmer confused the letter “l” with the number one, and that mistake makes the code run forever.
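The MATLAB snippet itself is not reproduced here, so below is a hypothetical Python reconstruction of this kind of mistake, not the original code. It assumes the loop counts down until a counter equals 1 and that the programmer typed the letter `l` where they meant the number `1`. The function names and the `max_steps` cap are my own additions. The cap exists so that the buggy version gives up instead of actually running forever.

```python
def count_orderings(l):
    """Intended behavior: the number of ways to order l objects (l factorial), for l >= 1."""
    f = 1
    i = l
    while i != 1:
        f *= i
        i -= 1  # correct: subtract the number one
    return f


def count_orderings_buggy(l, max_steps=1000):
    """The same loop with the number 1 mistyped as the letter l.

    max_steps is a hypothetical safety cap added for this demo; without it,
    the loop would never stop for l > 1.
    """
    f = 1
    i = l
    steps = 0
    while i != 1:
        f *= i
        i -= l  # bug: subtracts the variable l, so i jumps past 1 and never equals it
        steps += 1
        if steps >= max_steps:
            return None  # give up: the uncapped loop would run forever
    return f


print(count_orderings(3))        # 6
print(count_orderings_buggy(3))  # None
```

With `l = 3`, the correct loop multiplies 3 × 2 and stops when `i` reaches 1, giving 6. In the buggy loop, `i` drops from 3 straight to 0, then to -3, -6, and so on. It skips past 1 entirely, so the `i != 1` check never ends the loop.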

We see that even when the programmer has the right idea, other factors, like their programming environment, can lead to bugs. Some bugs can even be quite dangerous. In one of my first programming courses, we were warned that a mistake like the one above might shut down the server on which all students worked. In other words, one person’s mistake could take down that server for everyone at the university who relied on it.

But the bugs that take the most time to fix, and some of the most dangerous ones, are the ones a developer never even conceived of. The GMU study also found that developers took 8 times longer, on average, to fix bugs they did not introduce than to fix bugs they introduced themselves.

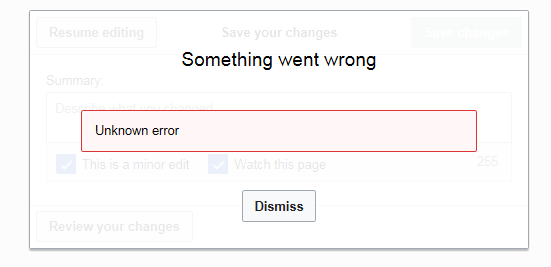

Many bugs also do not come to light until people begin using a program. After all, it is very difficult for a developer to think of everything a user might do with their program. An extreme example of this is the Log4J vulnerability. As described in this Wired article, there was an exploitable bug in a piece of code that many other programs rely on. Through this bug, an attacker can send a carefully written message, for example in an email, that lets them steal data from a system.

With unpredictable user interactions, programming environments that make mistakes easy, poor coordination when collaborating, and our inherent inability to process information the way computers do, the world of coding is filled with bugs. If you look at the screen of someone who is coding, chances are they are debugging or (unknowingly) writing a bug into their code. But somehow, we never see these bugs on television.